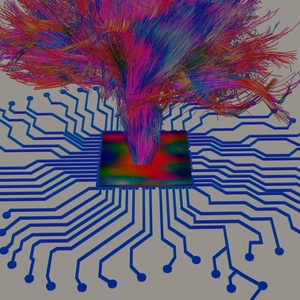

Moving Too Fast on AI Could Be Terrible for Humanity

- Article

- May 31, 2023

- #ArtificialIntelligence

The window of what AI can’t do seems to be contracting week by week. Machines can now write elegant prose and useful code, ace exams, conjure exquisite art, and predict how proteins...
